It was only after hours of searching that I finally came up with what I was looking for: a way to take a polygon mesh (OBJ or similar) and convert it into a blueprint for building LEGO sculptures.

Don’t get me wrong, there are tons of tools out there for LEGO CAD. But strangely none of them mention being able to go from a mesh to a LEGO layout. It’s surprising, since it seems like such a natural fit. The rise of 3D printers has rejuvinated interest in voxels, voulmetric pixels, and as evidenced by all the LEGO sculpture artists we seem to be in a golden age of LEGO.

Armed with Blender and a giant LEGO collection, I set out to get the computer to do the hard work for me. I used Blender, graph paper, a pencil, and of course lots of LEGOs.

Step 1: Voxelizing a Utah teapot

Let me preface this by saying that the Blender UI is not for the faint of heart. I took classes on Rhino and 3DSMax in college, and thought to myself “how different could it be?” The answer: very. If you’re new to blender, don’t fear the manual. You’re going to need it, particularly the parts on installing/using python scripts.

To voxelize the teapot I used a script called Add Cells which covers the surface of any object with any other object. First I imported the teapot, and scaled it up a bit. Then I created my “fundamental unit” of LEGO. LEGOs have an aspect ratio of 6:5, so I created a 1×1 LEGO, a 0.6×0.5×0.5 rectangular prism in Blender.

Selecting both the teapot and my 1×1 lego I ran the Add Cells script (go to the Scripts menu –> Add -> Cells). I chose the Teapot for my object to be voxelized and the 1×1 LEGO as my voxel model.

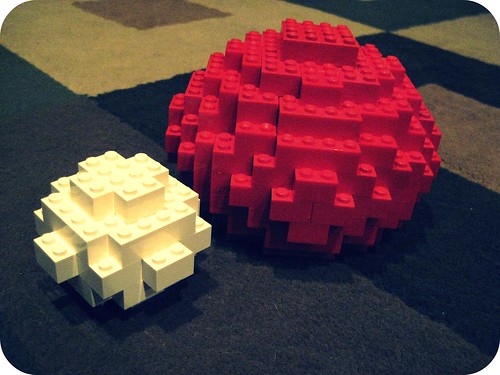

Tada! A blocky teapot!

Step 2: Graphing each layer on paper

In order to make the build process easier, I went through layer by layer and drew a map of each layer on graph paper. This way when building with LEGOs I could shade in with a pencil each voxel I’d built. It sounds redundant, but when things all start looking the same after a few minutes and something isn’t lining up, it’s very helpful.

To see one layer at a time in Blender I went into Sculpture Mode, side view, and used ctrl+shift+right mouse to select and hide all but the layer I wanted to see. Then I switched to Top view and copied the layer onto my graph paper. By the end I had a sheet of paper full of wobbly circular outlines.

Step 3: Building it with LEGOs!

The completed model uses 244 LEGOs, many of which are tiny 1×1 and 1×2 bricks. The model is hollow, but the walls need to be fairly thick to be able to support the top. As it is I probably should have made things a little thicker; putting the last two layers on was a delicate operation.

I built each layer sequentially. There were a few overhang pieces near the bottom which I had to append to the layer above them, since they couldn’t anchor to anything below.

Overall the project took about 4 hours, with a break in the middle for breakfast, church, etc.

Total LEGO count for the project was 244 individual bricks, distributed thusly:

- 44 2×3 Bricks

- 46 2×2 Bricks

- 58 2×4 Bricks

- 27 1×2 Bricks

- 17 1×3 Bricks

- 8 1×4 Bricks

- 8 2×2 L shaped Bricks

- 33 1×1 Bricks

- 1 2×8 Brick

- 1 2×6 Brick

- 1 1×8 Brick