This is part two of our home theater PC adventure. If you’ve just arrived, you may want to start with part one, “Hello Xbox 360 Wireless Gaming Receiver.”

Hooray, Windows can finally see the controller. We fire up the control panel tool for gaming devices and confirm that all buttons and axes work. We’re just about there! XBMC even helpfully includes keymappings for the Xbox controller by default! We should be pretty much plug-and-play from here! I open up XBMC and… nothing. The controller does nothing. I mash all the buttons, and still nothing.

The first step in debugging is to open up the log file and see what’s going on. The log file starts fresh every time you launch XBMC, and if you’re lucky you’ll see a line like this shoved in there:

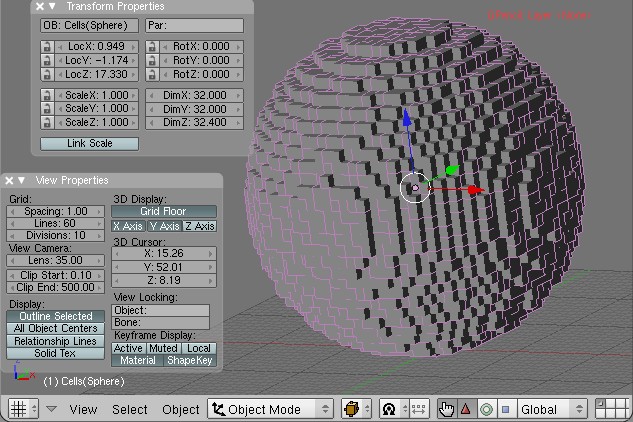

`19:00:17 T:2904 M:876916736 NOTICE: Enabled Joystick: Controller (XBOX 360 For Windows)`

This means that XBMC can see the controller. It also tells us that XBMC thinks the controller is named “Controller (XBOX 360 For Windows)”. Depending on your OS and a few other seemingly random factors, yours may be named something different.
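If you’d rather not scroll through the whole log by eye, a few lines of Python can pull the name out for you. This is a minimal sketch that assumes the line always has the `NOTICE: Enabled Joystick: <name>` shape shown above; other XBMC versions may word it differently, and the function name is just something I made up for illustration.

```python
import re

# Assumption: the log line looks like the one above, e.g.
# 19:00:17 T:2904 M:876916736 NOTICE: Enabled Joystick: Controller (XBOX 360 For Windows)
ENABLED_JOYSTICK = re.compile(r"NOTICE: Enabled Joystick: (?P<name>.+?)\s*$")


def enabled_joysticks(log_text: str) -> list[str]:
    """Return every joystick name the log reports as enabled, in log order."""
    names = []
    for line in log_text.splitlines():
        match = ENABLED_JOYSTICK.search(line)
        if match:
            names.append(match.group("name"))
    return names


if __name__ == "__main__":
    sample = (
        "(unrelated log output)\n"
        "19:00:17 T:2904 M:876916736 NOTICE: Enabled Joystick: "
        "Controller (XBOX 360 For Windows)\n"
    )
    print(enabled_joysticks(sample))
```

Whatever string comes back is the exact name your keymap has to match, parentheses and capitalization included.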

This name is critically important, because it’s how XBMC decides which keymap profile to pick up. When I went into the XBMC system/keymaps folder and looked at the existing 360 controller profiles, none of them matched this name exactly. So I copied one of them and pasted it into the userdata/keymaps folder.

The next step is to go into our copy and replace the joystick name with the exact name from the log.
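You can do the rename in any text editor, but if you’re setting up several profiles, here’s a rough Python sketch using the standard library’s ElementTree. It assumes the keymap wraps each set of controller bindings in a `<joystick name="...">` element, as the stock profiles I looked at did; a profile usually repeats that element once per window section, which is why the sketch renames every one it finds. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def rename_joystick(keymap_xml: str, new_name: str) -> str:
    """Return the keymap with every <joystick name="..."> set to new_name.

    Assumes bindings live under <joystick name="..."> elements, which may
    appear once per window section (global, Home, and so on).
    """
    root = ET.fromstring(keymap_xml)
    for joystick in root.iter("joystick"):
        joystick.set("name", new_name)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # A tiny made-up keymap; real profiles are much longer.
    stock = (
        '<keymap><global><joystick name="Some Other Xbox Name">'
        '<button id="1">Select</button><button id="2">Back</button>'
        "</joystick></global></keymap>"
    )
    print(rename_joystick(stock, "Controller (XBOX 360 For Windows)"))
```

One trade-off: ElementTree’s default parser drops XML comments, so if you want to keep the notes in the stock file, a plain find-and-replace on the name is the gentler option.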

I’m not sure exactly what happened, but at some point while I was messing around with all of this I managed to unpair the controller from the PC without realizing it. After much cursing and whining (“why doesn’t it woooooork?”), I realized what had happened and rebooted things.

Once I had renamed the joystick and it was actually communicating with the PC, I fired up XBMC and something magical happened: the A and B buttons were working as Select and Back, respectively. And the right analog stick was working as a volume control (though the axis was inverted). Not much else seemed to do anything, but IT WAS WORKING. Hooray!

The next task was getting the d-pad to work for navigating menus. Let me take this time to say that, like most people who grew up with Nintendo controllers, I regard the d-pad on the Xbox 360 controller with scorn and hatred. But I wasn’t quite ready to tackle the analog stick, so the d-pad would have to do.

At this point I turned on debug logging in XBMC and then methodically pressed every button on the controller (and swiveled each stick along each axis) exactly once. This worked great for the buttons, but none of the axis data showed up in the debug output at all. Great. It turned out that the keymap XML file I had copied identified the d-pad as “buttons” when Windows actually reports it as a “hat,” so once I replaced the d-pad’s “button” nodes with “hat” nodes, I was able to map the d-pad directions to the Up/Down/Left/Right commands.
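Here’s a rough sketch of that button-to-hat swap in Python, for anyone who wants to script it rather than edit by hand. Two loud assumptions: the hat element format (`<hat id="1" position="up">Up</hat>`) is my understanding of XBMC’s keymap syntax, so check it against the keymap documentation for your version; and the button ids in `DPAD_BUTTON_IDS` are placeholders, since the right numbers depend on which profile you copied. Press the d-pad with debug logging on to find yours.

```python
import xml.etree.ElementTree as ET

# HYPOTHETICAL ids: which <button id="..."> entries the copied profile used
# for the d-pad. Replace these with the ids from your own keymap.
DPAD_BUTTON_IDS = {"5": "up", "6": "down", "7": "left", "8": "right"}


def dpad_buttons_to_hat(keymap_xml: str, dpad_ids: dict[str, str], hat_id: str = "1") -> str:
    """Turn d-pad <button> bindings into <hat> bindings, keeping their actions.

    Assumed target format: <hat id="1" position="up">Up</hat>.
    Elements are rewritten in place, so their order and action text survive.
    """
    root = ET.fromstring(keymap_xml)
    for joystick in root.iter("joystick"):
        for child in list(joystick):
            if child.tag == "button" and child.get("id") in dpad_ids:
                position = dpad_ids[child.get("id")]
                child.tag = "hat"
                child.attrib.clear()
                child.set("id", hat_id)
                child.set("position", position)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    copied = (
        '<keymap><global><joystick name="Controller (XBOX 360 For Windows)">'
        '<button id="1">Select</button><button id="5">Up</button>'
        "</joystick></global></keymap>"
    )
    print(dpad_buttons_to_hat(copied, DPAD_BUTTON_IDS))
```

Non-d-pad buttons like A and B are left alone, so Select and Back keep working.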

I should mention that I spent a lot of time googling my problem, and mostly found forum threads where one person said “my Xbox controller doesn’t work” and another replied “use Xpadder,” or someone said “the d-pad doesn’t seem to work” and, again, the reply was “use Xpadder.” After reading that response in about 20 threads, I was really starting to think the whole system was just too buggy to be viable. But in reality, people on the XBMC forums just aren’t willing to get their hands dirty.

Only in the process of writing up this post did I find someone who had actually taken the time to map out which buttons, hats, and axes were which. I wish I had found that post last night; it would have saved me about two hours.

I’m still deciding what the analog sticks should do and trying to figure out how to get the controller to turn off when I’m done, but we got things to the point where it was good enough to navigate to Dexter, and that was enough for one night. And I will say, navigating with the Xbox controller feels nice, much nicer than breaking out the clunky keyboard.